Mastering Audio Duration Detection in JavaScript Applications

Processing audio files dynamically with JavaScript can be tricky, especially when working with raw data formats like WebM. A common task is retrieving the duration of a raw audio file, but developers often find that the loadedmetadata event never fires. Without that event, metadata such as the file's duration cannot be read reliably.

In JavaScript, a common way to load audio is to create an audio element and point it at the raw data through a Blob URL. However, WebM files with certain codecs, such as Opus, can cause trouble during loading. If the browser cannot parse the file, loadedmetadata never fires. Some WebM files (for example, recordings made with MediaRecorder) do load but report their duration as Infinity. Either way, the expected audio.duration value is not available.

This article explores how to reliably get the duration of a raw audio file in JavaScript. We'll walk through the problems that can arise with the typical Blob-based approach and suggest ways to overcome them. With a clearer picture of the media element API and how it handles metadata, you'll be able to integrate this functionality into your project more smoothly.

Whether you're building a web player or analyzing audio data in real time, you will need to handle these cases. We'll cover fixes and workarounds so that the metadata events fire as expected and your code gets the correct duration.

| Command | Description |
|---|---|
| atob() | Decodes a base64-encoded string into a "binary string" (one character per byte). Here it decodes the raw WebM audio supplied as base64. |
| Uint8Array() | Creates a typed array of 8-bit unsigned integers. It holds the decoded bytes of the audio file for further processing. |
| new Blob() | Creates a Blob from the audio bytes, so raw binary data can be handled as a file-like object in JavaScript. |
| URL.createObjectURL() | Generates a temporary URL for a Blob that can be assigned to a media element such as an audio tag. Release it with URL.revokeObjectURL() when it is no longer needed. |
| loadedmetadata event | Fires when the media's metadata (such as duration) is available. Reading duration inside this handler avoids getting NaN, although some WebM files still report Infinity. |
| FileReader | A browser API that reads Blob or File contents as text, an ArrayBuffer, or a data URL. A data URL can be used directly as an audio element's src. |
| ffmpeg.ffprobe() | A function from the fluent-ffmpeg package for Node.js that runs the ffprobe tool on a media file and returns its metadata, including duration. |
| Promise | Wraps callback-based operations such as ffprobe() so that results and errors can be handled with then/catch or async/await. |
| new Audio() | Creates an HTML5 audio element programmatically, allowing dynamic loading of audio from Blob URLs or data URLs. |

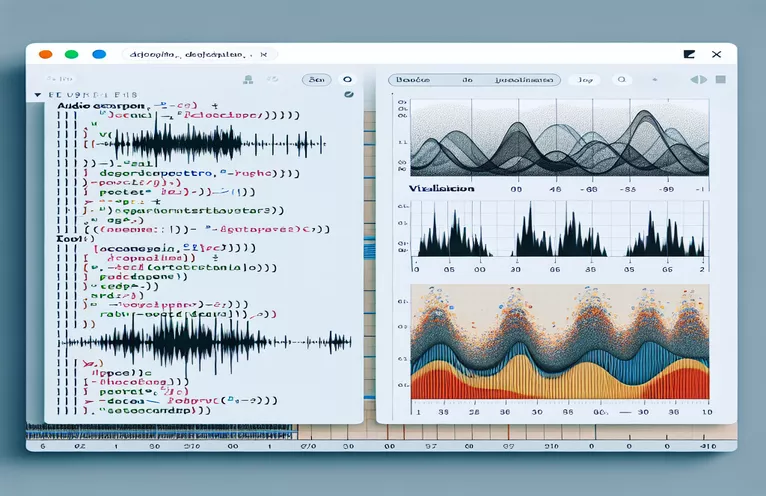

Analyzing and Retrieving Audio Duration from Raw WebM Files with JavaScript

In the first solution, we use the HTML5 audio element to load the audio data dynamically from a Blob. The process starts by converting the base64-encoded audio string into binary data using JavaScript’s atob() method. This decoded binary data is stored in a typed array of 8-bit unsigned integers using the Uint8Array() constructor. The array is then transformed into a Blob, which can act like a virtual file. This Blob is passed to the audio element via a Blob URL, making the audio data usable in the browser.
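The conversion steps above can be packaged into a reusable helper. This is a minimal sketch that assumes a well-formed base64 data: URL. The name dataUrlToBlob is hypothetical, and unlike the example code it keeps the full MIME type from the URL header (for example audio/webm;codecs=opus) on the Blob. atob() and Blob are globals in browsers and in recent Node.js versions, so nothing needs importing.

```javascript
// Hypothetical helper: turn a base64 data: URL into a Blob that keeps the
// MIME type from the URL header (e.g. "audio/webm;codecs=opus").
function dataUrlToBlob(dataUrl) {
  const commaIndex = dataUrl.indexOf(',');
  if (!dataUrl.startsWith('data:') || commaIndex === -1) {
    throw new Error('Not a data: URL');
  }
  // Header looks like "audio/webm;codecs=opus;base64"
  const header = dataUrl.slice(5, commaIndex);
  if (!header.endsWith(';base64')) {
    throw new Error('Only base64-encoded data: URLs are handled here');
  }
  const mimeType = header.slice(0, -';base64'.length);

  // atob() yields a "binary string": one character per byte (0-255)
  const binary = atob(dataUrl.slice(commaIndex + 1));
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return new Blob([bytes], { type: mimeType });
}
```

Keeping the declared type makes the Blob self-describing, which is convenient if blob.type is later passed to canPlayType().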

The next step is to listen for the loadedmetadata event on the audio element. This event fires once the browser has parsed enough of the file to know its metadata, so the duration property can be read safely inside the handler. Problems arise when the browser cannot parse the format or codec (here, WebM with Opus): it then fires an error event instead of loadedmetadata, and if nothing listens for error, the failure is silent. That is a likely explanation for the metadata event never firing in the original implementation. When the metadata does load, the handler logs the duration to the console.
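A related failure mode is that loadedmetadata does fire but audio.duration is Infinity. This commonly happens with WebM files produced by MediaRecorder, whose headers are written before the recording length is known. A widely used workaround is to seek far past the end, which makes the browser work out the real length and then report it through durationchange or timeupdate. The sketch below assumes that behavior; it is browser-dependent rather than guaranteed by a specification, and the name getFiniteDuration is hypothetical.

```javascript
// Hypothetical helper: resolve with a finite duration for an audio element.
// Assumes the element's src is set (or about to be set) by the caller.
// Relies on the browser-dependent "seek past the end" workaround.
function getFiniteDuration(audio) {
  return new Promise((resolve, reject) => {
    const onError = () => reject(audio.error || new Error('Media error'));
    audio.addEventListener('error', onError, { once: true });

    const finish = () => {
      audio.removeEventListener('error', onError);
      resolve(audio.duration);
    };

    const onMetadata = () => {
      if (Number.isFinite(audio.duration)) {
        finish();
        return;
      }
      // Duration unknown (Infinity): seek far past the end so the browser
      // determines the real length, then wait until it is reported.
      const onDurationKnown = () => {
        if (!Number.isFinite(audio.duration)) return; // not known yet
        audio.removeEventListener('durationchange', onDurationKnown);
        audio.removeEventListener('timeupdate', onDurationKnown);
        audio.currentTime = 0; // rewind for normal playback
        finish();
      };
      audio.addEventListener('durationchange', onDurationKnown);
      audio.addEventListener('timeupdate', onDurationKnown);
      audio.currentTime = Number.MAX_SAFE_INTEGER;
    };

    if (audio.readyState >= 1) {
      onMetadata(); // HAVE_METADATA already reached
    } else {
      audio.addEventListener('loadedmetadata', onMetadata, { once: true });
    }
  });
}
```

Usage: call getFiniteDuration(audio) right after assigning audio.src and await the result.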

In the second approach, the FileReader API reads the audio Blob (or a File from an upload input) and converts it into a data URL, which is assigned directly to the audio element's src. The same loadedmetadata event is then used to capture and log the duration. Note that a data URL changes only how the bytes reach the element, not whether the browser can decode them, so this approach does not work around an unsupported codec. Data URLs are also about a third larger than the underlying binary data (base64 overhead) and are held in memory as a string, so a Blob URL remains the lighter option for large files.
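If the container's duration field cannot be trusted at all, a different technique is to decode the audio and measure the result. The Web Audio API's decodeAudioData() returns an AudioBuffer whose duration is computed from the decoded samples rather than read from the file header. This is a swapped-in alternative, not part of the FileReader approach, and it decodes the whole file into memory, so it suits short clips better than long recordings. The helper name getDecodedDuration is hypothetical; the AudioContext is passed in so the caller controls its lifetime.

```javascript
// Hypothetical helper: measure duration from decoded samples instead of
// relying on the container's duration field.
// blob: a Blob or File; audioContext: an AudioContext or OfflineAudioContext.
async function getDecodedDuration(blob, audioContext) {
  // Blob.prototype.arrayBuffer() reads the bytes without FileReader
  const arrayBuffer = await blob.arrayBuffer();
  // decodeAudioData rejects if the browser cannot decode the format/codec
  const audioBuffer = await audioContext.decodeAudioData(arrayBuffer);
  return audioBuffer.duration; // seconds (sample frames / sample rate)
}

// Usage in a browser (inside an async function):
// const ctx = new AudioContext();
// const seconds = await getDecodedDuration(blob, ctx);
// await ctx.close();
```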

For server-side scenarios, the backend solution uses Node.js with the fluent-ffmpeg package, a wrapper around the ffmpeg command-line tools (which must be installed on the server). Its ffprobe() function analyzes the audio file and reports metadata, including the duration in seconds, through a callback. Wrapping that callback in a Promise lets the caller handle success and failure with then/catch or async/await. This approach is useful when audio processing already happens on the server, for example in file upload systems or media converters, and it does not depend on which codecs the user's browser supports.
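For comparison, the same measurement can be made without the fluent-ffmpeg wrapper by running the ffprobe command-line tool directly and asking only for the container duration. This sketch assumes ffprobe is installed and on the PATH; the helper names probeDuration and parseProbeOutput are hypothetical. With these flags ffprobe prints a bare number of seconds, or N/A when the file does not record a duration, which the parser turns into null.

```javascript
const { execFile } = require('node:child_process');

// Hypothetical helper: turn ffprobe's output into seconds, or null if unknown.
function parseProbeOutput(stdout) {
  const seconds = Number.parseFloat(stdout.trim());
  return Number.isFinite(seconds) ? seconds : null; // "N/A" -> null
}

// Hypothetical helper: ask ffprobe for the container duration only.
function probeDuration(filePath) {
  const args = [
    '-v', 'error',                               // print errors only
    '-show_entries', 'format=duration',          // just the duration field
    '-of', 'default=noprint_wrappers=1:nokey=1', // bare value, no key
    filePath,
  ];
  return new Promise((resolve, reject) => {
    execFile('ffprobe', args, (err, stdout) => {
      if (err) return reject(err);
      resolve(parseProbeOutput(stdout));
    });
  });
}

// Usage:
// probeDuration('path/to/audio.webm').then(console.log).catch(console.error);
```

Using execFile rather than exec passes the file path as a separate argument, so no shell quoting is involved.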

Handling WebM Audio Duration with JavaScript: An In-depth Solution

JavaScript front-end approach using the HTML5 audio element with Blob handling

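// Create an audio element and load raw audio data to get the duration
// (the base64 payload below is truncated)
const rawAudio = 'data:audio/webm;codecs=opus;base64,GkXfo59ChoEBQveBAULygQRC84EIQoKEd2...';

// Convert the base64 payload (everything after the comma) into a Blob
const byteCharacters = atob(rawAudio.split(',')[1]);
const byteNumbers = new Uint8Array(byteCharacters.length);
for (let i = 0; i < byteCharacters.length; i++) {
  byteNumbers[i] = byteCharacters.charCodeAt(i);
}
const blob = new Blob([byteNumbers], { type: 'audio/webm' });

// Create an audio element; attach listeners before setting src
const audio = new Audio();
const url = URL.createObjectURL(blob);
audio.addEventListener('loadedmetadata', () => {
  console.log('Audio duration:', audio.duration);
  URL.revokeObjectURL(url); // free the Blob URL if only the duration is needed
});
// Without this listener, an unsupported format or codec fails silently
audio.addEventListener('error', () => {
  console.error('Could not load audio:', audio.error);
});
audio.src = url;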

Fetching Duration from WebM Audio Using FileReader

Using JavaScript with the FileReader API for better file handling

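// Function to handle raw audio duration retrieval via FileReader
const getAudioDuration = (file) => {
  const reader = new FileReader();
  reader.onload = (e) => {
    const audio = new Audio();
    audio.addEventListener('loadedmetadata', () => {
      console.log('Duration:', audio.duration);
    });
    audio.addEventListener('error', () => {
      console.error('Could not load audio:', audio.error);
    });
    // e.target.result is a data: URL containing the whole file
    audio.src = e.target.result;
  };
  reader.onerror = () => console.error('Could not read file:', reader.error);
  reader.readAsDataURL(file);
};

// Usage: call with a Blob or File object
// getAudioDuration(blob);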

Node.js Backend Solution for Audio Duration Extraction

Using Node.js and the fluent-ffmpeg package (a wrapper around ffprobe) for server-side audio analysis

// Install the wrapper: npm install fluent-ffmpeg
// fluent-ffmpeg calls the ffprobe binary, which must be installed separately
// (or located with ffmpeg.setFfprobePath()).
const ffmpeg = require('fluent-ffmpeg');

// Function to get audio duration on the backend
const getAudioDuration = (filePath) => {
  return new Promise((resolve, reject) => {
    ffmpeg.ffprobe(filePath, (err, metadata) => {
      if (err) return reject(err);
      resolve(metadata.format.duration); // duration in seconds
    });
  });
};

// Usage: call with the path to the WebM audio file
getAudioDuration('path/to/audio.webm')
  .then((duration) => {
    console.log('Audio duration:', duration);
  })
  .catch(console.error);

Advanced Techniques for Handling Audio Metadata with JavaScript

An important consideration when working with audio metadata is browser compatibility. Not all browsers support every codec or container equally, which can cause problems when reading metadata from formats like WebM with Opus audio. Modern browsers generally handle these formats well, but in edge cases a fallback such as server-side processing may be needed to get consistent behavior. Checking format support beforehand, for example with the audio element's canPlayType() method, helps avoid unexpected failures.
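A minimal format check might look like the sketch below, assuming you have a list of MIME types you could serve. canPlayType() returns "probably", "maybe", or an empty string, so any non-empty answer means the browser thinks it may be able to play that type. The helper name firstPlayableType and the candidate list are illustrative.

```javascript
// Hypothetical helper: return the first MIME type the media element reports
// as possibly playable, or null if none are.
// canPlayType() returns "probably", "maybe", or "" (not supported).
function firstPlayableType(mediaElement, candidateTypes) {
  for (const type of candidateTypes) {
    if (mediaElement.canPlayType(type) !== '') {
      return type;
    }
  }
  return null;
}

// Usage in a browser:
// const type = firstPlayableType(new Audio(), [
//   'audio/webm;codecs=opus',
//   'audio/ogg;codecs=opus',
//   'audio/mp4;codecs=mp4a.40.2',
// ]);
// if (type === null) { /* fall back to server-side processing */ }
```

Because "maybe" is a weak signal, a positive answer does not guarantee that loadedmetadata will fire, so keep an error listener in place regardless.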

Another useful strategy is setting the audio element's preload attribute to "metadata". This tells the browser that only the metadata is needed, so it can avoid downloading the entire file, which helps with large remote files. The attribute is only a hint, however: browsers may load more or less than requested, and it does not guarantee that loadedmetadata will fire. Network delays, unsupported formats, or cross-origin restrictions can still prevent loading, so developers should also listen for the error event and consider a timeout.
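The preload hint and the error handling can be combined in one promise-returning loader. This is a sketch, assuming a caller-supplied media element and an arbitrary default timeout of 10 seconds for the case where neither loadedmetadata nor error ever arrives; loadMetadata and timeoutMs are hypothetical names.

```javascript
// Hypothetical loader: set preload="metadata", then settle on the first of
// loadedmetadata, error, or a timeout.
function loadMetadata(audio, src, timeoutMs = 10000) {
  return new Promise((resolve, reject) => {
    const cleanup = () => {
      clearTimeout(timer);
      audio.removeEventListener('loadedmetadata', onLoaded);
      audio.removeEventListener('error', onError);
    };
    const onLoaded = () => {
      cleanup();
      resolve(audio.duration); // may still be Infinity for some WebM files
    };
    const onError = () => {
      cleanup();
      reject(audio.error || new Error('Failed to load media'));
    };
    const timer = setTimeout(() => {
      cleanup();
      reject(new Error(`No metadata after ${timeoutMs} ms`));
    }, timeoutMs);

    audio.addEventListener('loadedmetadata', onLoaded);
    audio.addEventListener('error', onError);
    audio.preload = 'metadata'; // a hint only; the browser may load more
    audio.src = src;            // assigning src starts loading
  });
}

// Usage in a browser: const seconds = await loadMetadata(new Audio(), blobUrl);
```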

Finally, large audio applications benefit from asynchronous programming patterns. Wrapping event listeners in Promises lets you use JavaScript's async/await syntax, so code that waits for audio data reads sequentially while the page stays responsive. A modular design that keeps playback, metadata retrieval, and error handling in separate functions is especially valuable when an application deals with many media files. These practices make the code easier to maintain and extend.
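As a sketch of the modular, async/await style described above: one small function turns a single element's events into a promise, and a second function processes several sources with Promise.allSettled(), so one unreadable file does not abort the whole batch. The names durationOf and durationsFor, and the createAudio factory parameter (used so the code does not hard-wire new Audio()), are hypothetical.

```javascript
// Hypothetical: resolve with one element's duration, or reject on a media error.
function durationOf(audio, src) {
  return new Promise((resolve, reject) => {
    audio.addEventListener('loadedmetadata', () => resolve(audio.duration), { once: true });
    audio.addEventListener(
      'error',
      () => reject(audio.error || new Error(`Cannot load ${src}`)),
      { once: true },
    );
    audio.src = src;
  });
}

// Hypothetical: measure many sources concurrently and report each outcome.
async function durationsFor(sources, createAudio = () => new Audio()) {
  const results = await Promise.allSettled(
    sources.map((src) => durationOf(createAudio(), src)),
  );
  return results.map((result, i) => ({
    src: sources[i],
    duration: result.status === 'fulfilled' ? result.value : null,
    error: result.status === 'rejected' ? result.reason : null,
  }));
}

// Usage in a browser (inside an async function):
// const report = await durationsFor([urlA, urlB]);
// report.forEach(({ src, duration, error }) => console.log(src, duration ?? error));
```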

Essential FAQs on Retrieving Audio Duration Using JavaScript

- How can I ensure the loadedmetadata event fires consistently?

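- Setting the preload attribute to "metadata" helps the browser fetch the required data early, but it is only a hint. Also listen for the error event, which fires instead of loadedmetadata when the file cannot be loaded or decoded.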

- What is the purpose of converting a base64 audio string into a Blob?

- It allows you to treat the raw audio data like a file, which can be assigned to an audio element for playback or metadata extraction.

- What can cause the audio.duration property to return NaN?

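- audio.duration is NaN until the metadata has loaded, so reading it before loadedmetadata fires, or after a load that failed because of an unsupported format or codec, returns NaN. A related case is Infinity, which some WebM files (such as MediaRecorder output) report because their headers do not record the length.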

- Is there a way to check audio format compatibility before loading a file?

- Call the audio element's canPlayType() method with a MIME type (for example "audio/webm; codecs=opus"). It returns "probably", "maybe", or an empty string; only the empty string is a definite no.

- Can audio metadata be extracted on the backend?

- Yes. In Node.js, fluent-ffmpeg's ffmpeg.ffprobe() runs the ffprobe tool and returns metadata such as the duration on the server side.

Key Takeaways on Retrieving Audio Duration with JavaScript

Extracting audio duration involves understanding browser limitations, audio formats, and how to handle raw audio data in JavaScript. Converting the data to a Blob, loading it into an audio element, and reading duration inside a loadedmetadata handler, with an error handler alongside it, covers most cases.

Additionally, server-side tools like ffprobe provide a reliable fallback when browser support is inconsistent. By combining front-end and back-end approaches, developers can get accurate durations even when a particular browser cannot decode the format or the network is unreliable.
