Automating SonarQube Report Management

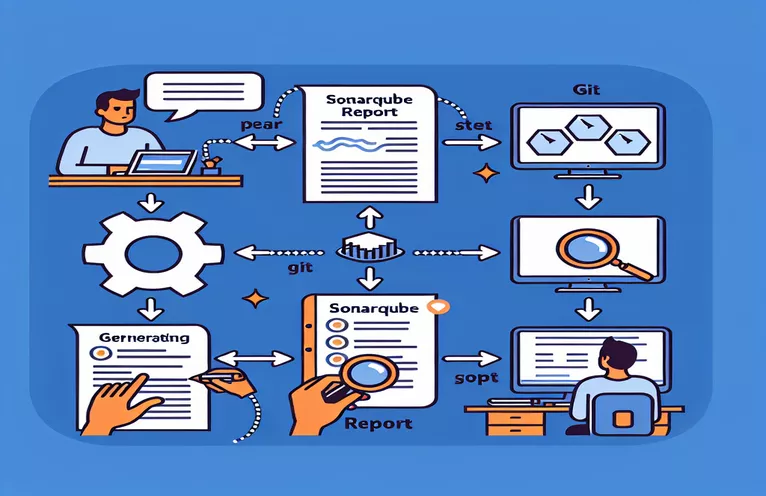

Managing the code quality of many microservices can be difficult. Automating the download, storage, and commit of SonarQube reports to a Git repository streamlines this process considerably.

This tutorial shows how to write a bash script, plus an equivalent Python script, that downloads SonarQube reports for 30 microservices, saves them to a specified directory on a Linux server, and commits them to a Git repository. By the end, you will also know the command for viewing these reports on the server.

| Command | Description |
|---|---|
| mkdir -p | Creates a directory, including any missing parent directories; does nothing if it already exists. |
| curl -u | Sends an HTTP request with the given user credentials; used here to download reports from a server. |
| os.makedirs | Recursively creates a directory in Python; with exist_ok=True, it does not fail if the directory already exists. |
| subprocess.run | Runs an external command (such as git) from Python and waits for it to finish. |
| cp | Copies files or directories from one location to another. |
| git pull | Fetches updates from a remote Git repository and merges them into the current branch. |
| git add | Stages changes from the working directory for the next commit. |
| git commit -m | Records the staged changes in the repository with a message describing them. |
| git push | Uploads local commits to a remote repository. |
| requests.get | Sends an HTTP GET request to the given URL from Python. |


These scripts automate three steps for several microservices: fetching SonarQube reports, saving them to a designated directory on a Linux server, and committing them to a Git repository. The bash script first defines the required variables: the SonarQube server URL, the token, the list of microservices, the resource directory, and the path to the Git repository. It then creates the resource directory with mkdir -p if it does not already exist. For each microservice, the script builds a report URL for SonarQube's api/measures/component endpoint (requesting the coverage metric), downloads the report with curl -u, and saves it in the resource directory as a JSON file.
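The URL-building and authentication part of that loop can be sketched in Python as follows. This is a minimal sketch, assuming the token is sent the same way `curl -u $SONARQUBE_TOKEN:` sends it: as the HTTP Basic username with an empty password. The helper names `build_report_url` and `basic_auth_header` are hypothetical, and URL-encoding the query with `urllib.parse.urlencode` is an addition the original scripts do not make; it only matters if a project key contains characters such as `:`.

```python
import base64
from urllib.parse import urlencode


def build_report_url(base_url: str, component: str, metrics: list[str]) -> str:
    """Build the api/measures/component URL for one project (hypothetical helper)."""
    # urlencode escapes reserved characters in the component key and metric list
    query = urlencode({"component": component, "metricKeys": ",".join(metrics)})
    return f"{base_url.rstrip('/')}/api/measures/component?{query}"


def basic_auth_header(token: str) -> dict[str, str]:
    """Encode the token as a Basic-auth username with an empty password, like curl -u TOKEN:."""
    credentials = base64.b64encode(f"{token}:".encode()).decode()
    return {"Authorization": f"Basic {credentials}"}


if __name__ == "__main__":
    print(build_report_url("http://your-sonarqube-server", "service1", ["coverage"]))
    print(basic_auth_header("your-sonarqube-token"))
```

The header dictionary can be passed to any HTTP client; `requests.get(url, auth=(token, ""))` produces the same header for you.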

After downloading the reports, the bash script changes to the Git repository directory and runs git pull so the local copy has the latest changes. It then copies the reports into the repository, stages them with git add, commits them with git commit -m, and pushes the commit to the remote repository with git push. The Python script performs the same steps, using os.makedirs to create directories, requests.get to download the reports, and subprocess.run to run the Git commands. Together, these steps keep a versioned history of each microservice's SonarQube reports in Git.
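One possible refinement to the copy-and-commit step: when no report has changed since the last run, `git commit` exits with a non-zero status because there is nothing to commit. The sketch below copies only reports whose contents differ from the copy already in the repository and returns their names. The function name `copy_changed_reports` is hypothetical, and comparing files by reading both in full is an assumption that suits small JSON reports.

```python
import glob
import os
import shutil


def _same_contents(a: str, b: str) -> bool:
    """Compare two files byte for byte."""
    with open(a, "rb") as fa, open(b, "rb") as fb:
        return fa.read() == fb.read()


def copy_changed_reports(resource_dir: str, target_dir: str) -> list[str]:
    """Copy *.json reports into target_dir, skipping files whose contents are unchanged.

    Returns the names of the files that were copied (hypothetical helper).
    """
    os.makedirs(target_dir, exist_ok=True)
    changed = []
    for source in sorted(glob.glob(os.path.join(resource_dir, "*.json"))):
        name = os.path.basename(source)
        destination = os.path.join(target_dir, name)
        # A report that is missing from the repository always counts as changed
        if os.path.exists(destination) and _same_contents(source, destination):
            continue
        shutil.copy(source, destination)
        changed.append(name)
    return changed
```

If the returned list is empty, the script can skip `git add`, `git commit`, and `git push` for that run.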

Getting SonarQube Reports for Microservices and Saving Them

An Automated Bash Script for SonarQube Report Management

```bash
#!/bin/bash
# Stop on the first failing command
set -e

# Define variables
SONARQUBE_URL="http://your-sonarqube-server"
SONARQUBE_TOKEN="your-sonarqube-token"
MICROSERVICES=("service1" "service2" "service3") # ... continue the list through "service30"
RESOURCE_DIR="/root/resource"
GIT_REPO="/path/to/your/git/repo"

# Create resource directory if it does not exist
mkdir -p "$RESOURCE_DIR"

# Loop through microservices and download reports
for SERVICE in "${MICROSERVICES[@]}"; do
  REPORT_URL="$SONARQUBE_URL/api/measures/component?component=$SERVICE&metricKeys=coverage"
  # Token is the username, password is empty; --fail makes curl exit non-zero on HTTP errors
  curl --fail -sS -u "$SONARQUBE_TOKEN:" "$REPORT_URL" -o "$RESOURCE_DIR/$SERVICE-report.json"
done

# Change to git repository and fetch the latest changes
cd "$GIT_REPO"
git pull

# Copy reports to git repository
mkdir -p "$GIT_REPO/resource"
cp "$RESOURCE_DIR"/*.json "$GIT_REPO/resource/"

# Commit and push reports to git repository, skipping the commit if nothing changed
git add resource/*.json
if ! git diff --cached --quiet; then
  git commit -m "Add SonarQube reports for microservices"
  git push
fi

# Command to display report in Linux server
cat "$RESOURCE_DIR/service1-report.json"
```

Automating Git Tasks for Reports in SonarQube

Python Script for Git Report Management with SonarQube

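```python
import glob
import os
import shutil
import subprocess

import requests

# Define variables
sonarqube_url = "http://your-sonarqube-server"
sonarqube_token = "your-sonarqube-token"
microservices = ["service1", "service2", "service3"]  # ... continue the list through "service30"
resource_dir = "/root/resource"
git_repo = "/path/to/your/git/repo"

# Create resource directory if it does not exist
os.makedirs(resource_dir, exist_ok=True)

# Download reports; the token is sent as the username with an empty password
for service in microservices:
    report_url = f"{sonarqube_url}/api/measures/component?component={service}&metricKeys=coverage"
    response = requests.get(report_url, auth=(sonarqube_token, ""), timeout=30)
    response.raise_for_status()  # stop on 401/404 instead of saving an error body
    with open(f"{resource_dir}/{service}-report.json", "w") as f:
        f.write(response.text)

# Git operations; check=True raises CalledProcessError if a command fails
subprocess.run(["git", "pull"], cwd=git_repo, check=True)

# Copy reports with glob and shutil instead of cp: without a shell, "*.json" is not expanded
target_dir = os.path.join(git_repo, "resource")
os.makedirs(target_dir, exist_ok=True)
for report in glob.glob(os.path.join(resource_dir, "*.json")):
    shutil.copy(report, target_dir)

# Git expands the quoted "resource/*.json" pathspec itself
subprocess.run(["git", "add", "resource/*.json"], cwd=git_repo, check=True)
# Commit and push only if something is staged (git diff --cached --quiet exits 1 when it is)
if subprocess.run(["git", "diff", "--cached", "--quiet"], cwd=git_repo).returncode != 0:
    subprocess.run(["git", "commit", "-m", "Add SonarQube reports for microservices"], cwd=git_repo, check=True)
    subprocess.run(["git", "push"], cwd=git_repo, check=True)

# Display a report
with open(f"{resource_dir}/service1-report.json") as f:
    print(f.read())
```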

Boosting Automation with Cron Jobs

Cron jobs can automate the download and commit of SonarQube reports even further. On Unix-like operating systems, a cron job is a task that runs on a fixed schedule. By scheduling the scripts to run at regular intervals, such as daily or weekly, you keep your SonarQube reports up to date without manual intervention. To create a cron job, run crontab -e to edit your cron table and add an entry that specifies the schedule and the script to run.
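A crontab entry is five schedule fields (minute, hour, day of month, month, day of week) followed by the command. The sketch below only assembles and sanity-checks such a line in Python so the format is explicit; the helper name `cron_entry`, the script path `/path/to/sonar-reports.sh`, and the log path are hypothetical. The resulting line is what you would paste into the editor that `crontab -e` opens.

```python
from typing import Optional


def cron_entry(schedule: str, command: str, log_file: Optional[str] = None) -> str:
    """Return a crontab line; schedule must have the five standard fields."""
    fields = schedule.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 schedule fields, got {len(fields)}: {schedule!r}")
    line = f"{' '.join(fields)} {command}"
    if log_file:
        # Append both stdout and stderr of each run to the log file
        line += f" >> {log_file} 2>&1"
    return line


if __name__ == "__main__":
    # Every day at 02:00
    print(cron_entry("0 2 * * *", "/path/to/sonar-reports.sh", "/var/log/sonar-reports.log"))
    # Every Monday at 06:30
    print(cron_entry("30 6 * * 1", "/path/to/sonar-reports.sh"))
```

The first call produces `0 2 * * * /path/to/sonar-reports.sh >> /var/log/sonar-reports.log 2>&1`. Because cron runs jobs with a minimal environment, absolute paths for the script and the log file are the safer choice.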

Scheduling the scripts this way reduces the chance of missed report updates and removes the manual steps from the process. Log files let you check whether each cron run succeeded or failed: adding logging commands such as echo "Log message" >> /path/to/logfile to your script produces a record of every action it takes. With this setup, report collection runs reliably alongside your microservices' continuous integration and delivery (CI/CD) pipelines.
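The same logging idea applies to the Python version of the script. In this minimal sketch, the hypothetical helper `run_logged` wraps `subprocess.run`, writes one timestamped line per command through the standard `logging` module, and returns the exit code so the caller can decide whether to continue. The log path `/tmp/sonar-reports.log` is an assumption; use a location the cron user can write to.

```python
import logging
import subprocess
import sys
from typing import Optional


def run_logged(cmd: list[str], cwd: Optional[str] = None) -> int:
    """Run a command, log success or failure, and return its exit code."""
    result = subprocess.run(cmd, cwd=cwd, capture_output=True, text=True)
    if result.returncode == 0:
        logging.info("OK: %s", " ".join(cmd))
    else:
        logging.error("FAILED (%d): %s: %s", result.returncode, " ".join(cmd), result.stderr.strip())
    return result.returncode


if __name__ == "__main__":
    logging.basicConfig(
        filename="/tmp/sonar-reports.log",  # hypothetical log location
        level=logging.INFO,
        format="%(asctime)s %(levelname)s %(message)s",
    )
    run_logged([sys.executable, "--version"])
```

In the report script, a call such as `run_logged(["git", "pull"], cwd=git_repo)` would replace the bare `subprocess.run` call, and a non-zero return value could stop the run before committing.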

Common Questions and Answers

- How can I configure my script to run as a cron job?

- Run crontab -e and add a line containing the schedule followed by the full path to the script.

- What rights are required in order to execute these scripts?

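- The user running the scripts needs read and write permission on the resource directory and the Git repository, and execute permission on the script files. The default resource directory, /root/resource, is writable only by root, so choose another path if the scripts run as a regular user. The SonarQube token must also belong to an account that can browse the projects.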

- How can I deal with script execution errors?

- Check the exit status of each command, for example with if statements or set -e in bash, or check=True in subprocess.run in Python, and log any failures.

- Can I download using an application other than curl?

- Yes. From the shell you can use wget instead of curl, and in Python the requests library does the same job.

- How do I make sure my Git repository is always up to date?

- Run git pull in your script before committing, so it fetches the latest changes from the remote repository first.

- Can these scripts run on a schedule other than once a day?

- Yes. Edit the cron entry to run the scripts hourly, weekly, or at any other interval.

- How should my SonarQube token be safely stored?

- Store the token in an environment variable or a configuration file that only you can read, rather than hard-coding it in the script.

- Can I see the logs from when my cron jobs were executed?

- Yes. You can write your own log file from the script, or check the system's cron log (for example /var/log/syslog on Debian and Ubuntu, or /var/log/cron on RHEL-based systems).

- How can I be sure that the reports were downloaded correctly?

- Use the cat command to inspect the downloaded files and confirm that they contain JSON measure data rather than an error message.
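`cat` shows what a file contains but not whether it is valid. A slightly stronger check, sketched below in Python, parses each report as JSON and extracts the coverage value. It assumes the response shape of SonarQube's `api/measures/component` endpoint: a top-level `component` object holding a `measures` list of `{"metric": ..., "value": ...}` entries. Verify this against your server's version. The function names `read_coverage` and `check_reports` are hypothetical.

```python
import glob
import json
import os
from typing import Optional


def read_coverage(report_path: str) -> Optional[str]:
    """Return the coverage value from one report, or None if it is missing.

    Raises json.JSONDecodeError if the file is not valid JSON.
    """
    with open(report_path) as f:
        data = json.load(f)
    # An error response (for example an unknown component key) has no "component" object
    for measure in data.get("component", {}).get("measures", []):
        if measure.get("metric") == "coverage":
            return measure.get("value")
    return None


def check_reports(resource_dir: str) -> dict[str, Optional[str]]:
    """Map each *-report.json file name to its coverage value."""
    results = {}
    for path in sorted(glob.glob(os.path.join(resource_dir, "*-report.json"))):
        results[os.path.basename(path)] = read_coverage(path)
    return results


if __name__ == "__main__":
    for name, coverage in check_reports("/root/resource").items():
        print(f"{name}: {coverage if coverage is not None else 'no coverage measure'}")
```

From the shell, `python3 -m json.tool /root/resource/service1-report.json` performs the same validity check for a single file.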

Wrapping Up the Process

Automating SonarQube report management comes down to scripts that download the reports, store them, and commit them to a Git repository. With bash or Python, you can automate these steps and make sure the code quality of your microservices is tracked and recorded regularly. Cron jobs add another layer of automation and reduce manual work, while proper error handling and logging make the system more robust. Beyond saving time, this approach fits into an existing CI/CD pipeline and gives you a dependable way to manage SonarQube reports on a Linux server.