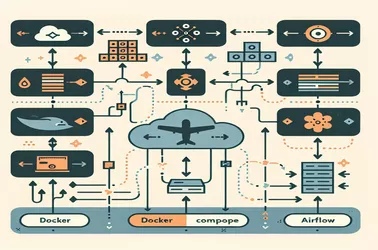

Managing dynamic task sequencing in Apache Airflow can be difficult, particularly when dependencies must be determined at runtime. Instead of hardcoding task dependencies, a DAG can read them from `dag_run.conf`, the configuration passed in when a run is triggered, which makes the workflow more flexible. This approach is especially helpful for data processing pipelines whose input parameters change often. With the TaskFlow API or `PythonOperator` tasks, workflows can adapt to external triggers. Whether they handle diverse datasets, automate ETL pipelines, or streamline task execution, dynamic DAGs offer a scalable option for modern data operations.
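As a rough illustration of the idea, the sketch below shows one way a Python callable might read an ordered list of step names from `dag_run.conf` and run them in that order. It is a minimal sketch under stated assumptions, not the article's implementation: the `task_order` and `params` keys, the `STEP_REGISTRY` mapping, and the step functions are all hypothetical names chosen for this example. It also runs the steps inside a single callable rather than creating separate Airflow task instances.

```python
from types import SimpleNamespace
from typing import Any, Callable, Dict, List

# Hypothetical step functions; real steps would do actual work.
def extract(params: Dict[str, Any]) -> str:
    return f"extract:{params.get('source', 'default')}"

def transform(params: Dict[str, Any]) -> str:
    return f"transform:{params.get('source', 'default')}"

def load(params: Dict[str, Any]) -> str:
    return f"load:{params.get('source', 'default')}"

# Hypothetical registry mapping step names (as sent in the run conf) to callables.
STEP_REGISTRY: Dict[str, Callable[[Dict[str, Any]], str]] = {
    "extract": extract,
    "transform": transform,
    "load": load,
}

# Order used when the trigger supplies no "task_order" key (an assumption).
DEFAULT_ORDER: List[str] = ["extract", "transform", "load"]

def resolve_sequence(conf: Dict[str, Any]) -> List[str]:
    """Return the requested step order, rejecting names not in the registry."""
    requested = conf.get("task_order", DEFAULT_ORDER)
    unknown = [name for name in requested if name not in STEP_REGISTRY]
    if unknown:
        raise ValueError(f"Unknown steps in dag_run.conf: {unknown}")
    return list(requested)

def run_sequence(**context: Any) -> List[str]:
    """Callable in the shape a PythonOperator accepts: reads context['dag_run'].conf."""
    conf = context["dag_run"].conf or {}  # treat a missing conf as empty
    params = conf.get("params", {})
    return [STEP_REGISTRY[name](params) for name in resolve_sequence(conf)]

if __name__ == "__main__":
    # Stand-in for the DagRun object that Airflow would place in the context.
    fake_run = SimpleNamespace(
        conf={"task_order": ["extract", "load"], "params": {"source": "s3"}}
    )
    print(run_sequence(dag_run=fake_run))
```

In a DAG, `run_sequence` could be passed as the `python_callable` of a `PythonOperator`, and the order supplied at trigger time, for example with `airflow dags trigger --conf '{"task_order": ["extract", "load"]}' my_dag`, where `my_dag` is a placeholder DAG id. Validating names against a registry keeps arbitrary input in the run configuration from selecting code that was never meant to run.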

Alice Dupont

11 February 2025

Generating Dynamic Task Sequences in Airflow Using DAG Run Configuration