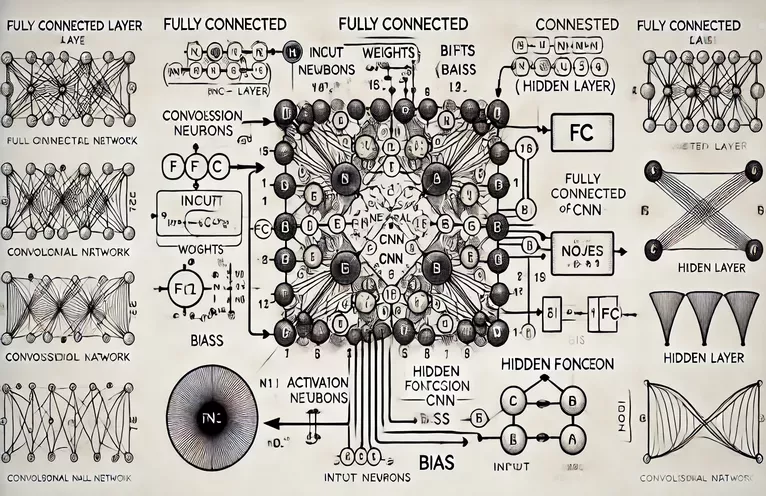

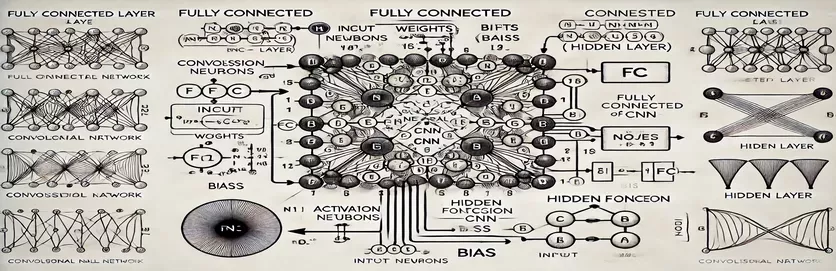

Demystifying Fully Connected Layers in CNNs

Understanding how a Fully Connected (FC) layer in a Convolutional Neural Network (CNN) works can feel like unraveling a mystery. For many, the difficulty lies in the calculation itself: how exactly is the value of one node in the layer derived? The arithmetic is the same as in a layer of a traditional Artificial Neural Network (ANN). What tutorials often leave unexplained is how the output of the convolutional layers becomes the FC layer's input. If you're puzzled about this, you're not alone!

Many resources skim over this topic, leaving learners without clear guidance. Tutorials often recycle incomplete explanations, adding to the frustration of those who seek clarity. If you've found yourself repeatedly searching for answers, you're in the right place. 🧩

In this guide, we’ll focus on calculating a single node of an FC layer. Once you grasp the mechanism for one node, the rest of the layer follows the same pattern. By breaking this process into clear, actionable steps, you’ll gain the confidence to navigate any FC layer calculation.

Using relatable examples and a straightforward diagram, we'll illuminate the pathway from inputs to outputs in the FC layer. Say goodbye to confusion and hello to understanding—let's dive in! 🚀

| Command | Description |
|---|---|
| np.dot() | Performs the dot product between two arrays. Used here to calculate the weighted sum of inputs and weights for a node in the fully connected layer. |
| np.maximum() | Applies the ReLU activation function by taking the element-wise maximum of the calculated outputs and zero. |
| torch.tensor() | Creates a tensor in PyTorch. Tensors are the basic building blocks for data representation in deep learning frameworks. |
| torch.matmul() | Performs matrix multiplication in PyTorch. Used here to multiply the weight matrix by the input vector, computing every node's weighted sum at once. |
| torch.nn.functional.relu() | Applies the ReLU activation function in PyTorch, setting all negative values in the tensor to zero. |
| np.testing.assert_array_almost_equal() | Compares two arrays element-wise for equality up to a given number of decimal places. Useful for checking numerical results, where exact equality is unreliable. |
| unittest.TestCase | A base class in the unittest module for creating test cases. Used to structure and organize unit tests in Python. |
| np.array() | Creates an array in NumPy. Arrays are used to represent inputs, weights, and biases in the fully connected layer calculations. |
| unittest.main() | Runs all the test cases defined in the script. |

Breaking Down Fully Connected Layer Calculations

The scripts provided aim to demystify how a node in a fully connected (FC) layer of a CNN processes data from the previous layer. An FC layer connects every input to every node through its own weight, and each node also has a bias; such layers are commonly used at the end of CNNs for tasks like image classification. The first script calculates the output of a single node using NumPy: it multiplies each input value by its corresponding weight, sums the products, and adds the bias. The result is then passed through an activation function (here ReLU) to introduce non-linearity. For example, the inputs might be feature values that the convolutional layers extracted from an image, and the learned weights determine how strongly each feature contributes to the node's output. 🖼️
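Written out with the first script's numbers (inputs x = (0.5, 0.8, 0.2), weights w = (0.4, 0.7, 0.3), bias b = 0.1), the single-node calculation looks like this. The symbols z_1 for the weighted sum and a_1 for the activated output are notation chosen for this sketch, not names used in the scripts:

```latex
\begin{aligned}
z_1 &= \sum_{i=1}^{3} w_i x_i + b \\
    &= (0.4)(0.5) + (0.7)(0.8) + (0.3)(0.2) + 0.1 \\
    &= 0.20 + 0.56 + 0.06 + 0.1 = 0.92 \\
a_1 &= \mathrm{ReLU}(z_1) = \max(0,\ 0.92) = 0.92
\end{aligned}
```

Repeating the same steps with the weights (0.2, 0.9, 0.5) and bias 0.2 gives 0.10 + 0.72 + 0.10 + 0.2 = 1.12 for a second node.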

The second script generalizes the calculation to multiple nodes using matrix multiplication. The weights are stored as a 2D matrix with one row per node and one column per input, and the inputs as a vector, so multiplying the matrix by the vector computes every node's weighted sum in a single operation. Adding the biases and applying the ReLU activation function then produces the final outputs of the layer. This matrix form is the core operation behind FC layers in modern deep learning frameworks, which run it with optimized linear-algebra routines. For instance, in a face recognition system, this process could help determine whether a detected shape resembles a human face. 😊
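To see that the matrix form is just the single-node calculation repeated once per row, the sketch below computes each node separately with np.dot on its own weight row and compares the result with one matrix-vector product. It reuses the example numbers from the scripts; nothing else is assumed.

```python
import numpy as np

# Same example values as the multi-node script
inputs = np.array([0.5, 0.8, 0.2])
weights = np.array([[0.4, 0.7, 0.3],   # row 0: weights of node 1
                    [0.2, 0.9, 0.5]])  # row 1: weights of node 2
biases = np.array([0.1, 0.2])

# Node by node: each node is the dot product of its own weight row with the inputs
per_node = np.array([np.dot(weights[i], inputs) + biases[i]
                     for i in range(weights.shape[0])])

# All nodes at once: a single matrix-vector product
all_at_once = weights @ inputs + biases

print(per_node)                            # approximately [0.92, 1.12]
print(np.allclose(per_node, all_at_once))  # True
```

The loop and the matrix product do identical arithmetic; the matrix version simply hands the whole layer to an optimized routine in one call.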

For those working with deep learning libraries like PyTorch, the third script demonstrates how to use tensors and built-in functions to achieve the same calculations. PyTorch’s flexibility and built-in optimizations make it ideal for building and training neural networks. The script shows how to define inputs, weights, and biases as tensors and perform matrix multiplication using the torch.matmul() function. This is particularly useful for creating end-to-end pipelines for training CNNs on large datasets, such as identifying animals in wildlife photographs.
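In practice, inputs usually arrive in batches. According to the PyTorch documentation, torch.nn.Linear stores its weight with shape (out_features, in_features) and computes the transposed form x @ W.T + b for a batch x of shape (batch, in_features). The NumPy sketch below mirrors that convention with the script's weights so it runs without PyTorch installed; the second sample in the batch is a made-up value for illustration.

```python
import numpy as np

# Weights in the (out_features, in_features) layout used by torch.nn.Linear
weights = np.array([[0.4, 0.7, 0.3],
                    [0.2, 0.9, 0.5]])
biases = np.array([0.1, 0.2])

# Hypothetical batch: row 0 is the example input, row 1 is invented for illustration
batch = np.array([[0.5, 0.8, 0.2],
                  [2.0, -1.0, 0.0]])

# (batch, in_features) @ (in_features, out_features) -> (batch, out_features)
pre_activation = batch @ weights.T + biases
outputs = np.maximum(0, pre_activation)  # element-wise ReLU

print(outputs.shape)  # (2, 2)
print(outputs)
```

For the second sample, node 2's weighted sum is negative (-0.3), so ReLU sets it to zero, while node 1 keeps its value of about 0.2.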

Finally, the unit tests use the unittest library to check the NumPy calculations, for a single node and for a whole layer, against expected values. Tests like these are crucial for debugging and for ensuring reliability, especially when deploying CNNs in real-world applications like medical image analysis. With these scripts and explanations, you now have a clear path to understanding and implementing FC layers in CNNs confidently. 🚀
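When testing numerical code, exact equality on floating-point results is fragile, which is why tolerance-based checks such as np.testing.assert_array_almost_equal or unittest's assertAlmostEqual are the safer choice. A quick illustration using the single-node example:

```python
import numpy as np

# Floating-point sums rarely land exactly on the decimal value computed by hand
print(0.1 + 0.2 == 0.3)            # False
print(np.isclose(0.1 + 0.2, 0.3))  # True

# The node from the single-node script, checked with a tolerance instead of ==
node1 = np.dot(np.array([0.5, 0.8, 0.2]), np.array([0.4, 0.7, 0.3])) + 0.1
print(np.isclose(node1, 0.92))     # True
```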

Understanding the Calculation of a Node in the Fully Connected Layer

Python-based solution leveraging NumPy for matrix calculations

```python
# Import necessary library
import numpy as np

# Define inputs to the fully connected layer (e.g., from previous convolutional layers)
inputs = np.array([0.5, 0.8, 0.2])  # Example inputs

# Define weights for the first node in the hidden layer
weights_node1 = np.array([0.4, 0.7, 0.3])

# Define bias for the first node
bias_node1 = 0.1

# Calculate the output for node 1: weighted sum of inputs plus bias
node1_output = np.dot(inputs, weights_node1) + bias_node1

# Apply an activation function (e.g., ReLU)
node1_output = max(0, node1_output)

# Print the result
print(f"Output of Node 1: {node1_output}")
```

Generalizing Node Calculation in Fully Connected Layers

Python-based solution for calculating all nodes in a hidden layer

```python
# Import necessary library
import numpy as np

# Define inputs to the fully connected layer
inputs = np.array([0.5, 0.8, 0.2])

# Define weights matrix (rows: nodes, columns: inputs)
weights = np.array([[0.4, 0.7, 0.3],   # Node 1
                    [0.2, 0.9, 0.5]])  # Node 2

# Define bias for each node
biases = np.array([0.1, 0.2])

# Calculate outputs for all nodes
outputs = np.dot(weights, inputs) + biases

# Apply activation function (e.g., ReLU)
outputs = np.maximum(0, outputs)

# Print the results
print(f"Outputs of Hidden Layer: {outputs}")
```

Using PyTorch for Node Calculation in a Fully Connected Layer

Implementation with PyTorch for deep learning enthusiasts

```python
# Import PyTorch
import torch

# Define inputs as a tensor
inputs = torch.tensor([0.5, 0.8, 0.2])

# Define weights (rows: nodes, columns: inputs) and biases
weights = torch.tensor([[0.4, 0.7, 0.3],   # Node 1
                        [0.2, 0.9, 0.5]])  # Node 2
biases = torch.tensor([0.1, 0.2])

# Calculate outputs
outputs = torch.matmul(weights, inputs) + biases

# Apply ReLU activation
outputs = torch.nn.functional.relu(outputs)

# Print results
print(f"Outputs of Hidden Layer: {outputs}")
```

Test Each Solution with Unit Tests

Python-based unit tests to ensure correctness of implementations

```python
# Import libraries (NumPy is needed by the tests themselves)
import unittest
import numpy as np

# Define the test case class
class TestNodeCalculation(unittest.TestCase):
    def test_single_node(self):
        inputs = np.array([0.5, 0.8, 0.2])
        weights_node1 = np.array([0.4, 0.7, 0.3])
        bias_node1 = 0.1
        output = max(0, np.dot(inputs, weights_node1) + bias_node1)
        # 0.4*0.5 + 0.7*0.8 + 0.3*0.2 + 0.1 = 0.92; compare with a tolerance, not ==
        self.assertAlmostEqual(output, 0.92)

    def test_multiple_nodes(self):
        inputs = np.array([0.5, 0.8, 0.2])
        weights = np.array([[0.4, 0.7, 0.3],
                            [0.2, 0.9, 0.5]])
        biases = np.array([0.1, 0.2])
        outputs = np.maximum(0, np.dot(weights, inputs) + biases)
        # Node 2: 0.2*0.5 + 0.9*0.8 + 0.5*0.2 + 0.2 = 1.12
        np.testing.assert_array_almost_equal(outputs, np.array([0.92, 1.12]))

# Run the tests
if __name__ == "__main__":
    unittest.main()
```

Unraveling the Importance of Fully Connected Layers in CNNs

Fully connected (FC) layers play a pivotal role in transforming extracted features from convolutional layers into final predictions. They work by connecting every input to every output, providing a dense mapping of learned features. Unlike convolutional layers that focus on spatial hierarchies, FC layers aggregate this information to make decisions like identifying objects in an image. For instance, in a self-driving car's image recognition system, the FC layer might determine whether a detected object is a pedestrian or a street sign. 🚗
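The step that connects the convolutional part of the network to the FC layer is flattening: the stack of feature maps is reshaped into one long vector, and each FC node then computes a weighted sum over every entry of that vector. The sketch below assumes a hypothetical convolutional output of 2 feature maps of size 3×3 and an FC layer with 4 nodes; all values and weights are random placeholders, not learned ones.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical conv output: 2 feature maps (channels), each 3x3
feature_maps = rng.random((2, 3, 3))

# Flatten to a single vector of 2 * 3 * 3 = 18 values
x = feature_maps.reshape(-1)

# FC layer with 4 nodes: one weight per (node, input) pair, one bias per node
n_nodes = 4
weights = rng.normal(size=(n_nodes, x.size))
biases = np.zeros(n_nodes)

outputs = np.maximum(0, weights @ x + biases)  # ReLU
print(x.shape, weights.shape, outputs.shape)   # (18,) (4, 18) (4,)
```

Once the maps are flattened, each node's calculation is exactly the single-node weighted sum shown earlier, just with 18 inputs instead of 3.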

Because each node has its own weights and bias, different nodes can learn to respond to different combinations of features, and in the final FC layer each node typically corresponds to one output class. For example, in a handwritten digit recognition model, the last FC layer has ten nodes, one per digit (0-9), and the node with the highest output gives the predicted digit. Since these layers produce the final scores, how well they generalize from the training data to unseen data directly affects the model's accuracy. ✍️
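A minimal sketch of that last step, assuming a hypothetical 16-value feature vector and random weights (a trained model would use learned ones). No ReLU is applied here, because the raw scores of an output layer are usually not clipped at zero.

```python
import numpy as np

rng = np.random.default_rng(seed=42)

features = rng.random(16)             # hypothetical features from earlier layers
weights = rng.normal(size=(10, 16))   # one row of weights per digit class 0-9
biases = np.zeros(10)

scores = weights @ features + biases       # one score per class
predicted_digit = int(np.argmax(scores))   # index of the highest-scoring node
print(scores.shape, predicted_digit)
```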

FC layers are computationally expensive because of their dense connections: a layer with n inputs and m nodes has n × m weights plus m biases. They nonetheless remain widely used for classification, and techniques like dropout help them perform well on new data by reducing overfitting. Dropout randomly sets a fraction of the layer's activations to zero during each training step (and is switched off at inference), which discourages nodes from relying on one another and pushes the FC layer to learn more robust features. This is valuable in applications like facial recognition and medical image diagnostics.
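Both points can be made concrete in a few lines. The sketch below counts the parameters of an FC layer and implements dropout in the common "inverted" formulation, which rescales the surviving activations by 1 / (1 - p) during training so that no rescaling is needed at inference. The function names and the 4096×4096 layer size are illustrative assumptions, not taken from the scripts above.

```python
import numpy as np

def fc_param_count(n_in: int, n_out: int) -> int:
    """Weights (n_in * n_out) plus one bias per node (n_out)."""
    return n_in * n_out + n_out

def dropout(activations: np.ndarray, p: float, rng: np.random.Generator,
            training: bool = True) -> np.ndarray:
    """Inverted dropout: zero each activation with probability p (0 <= p < 1)."""
    if not training or p == 0.0:
        return activations  # dropout is disabled at inference time
    keep_mask = rng.random(activations.shape) >= p  # True with probability 1 - p
    return activations * keep_mask / (1.0 - p)      # rescale the survivors

print(fc_param_count(4096, 4096))  # 16781312

rng = np.random.default_rng(seed=0)
h = np.array([0.92, 1.12, 0.5, 0.3])
print(dropout(h, p=0.5, rng=rng))                  # some entries zeroed, the rest doubled
print(dropout(h, p=0.5, rng=rng, training=False))  # unchanged
```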

Common Questions About Fully Connected Layers

- What is the main function of a fully connected layer in CNNs?

- The FC layer connects every input to every output node, combining the features extracted by the convolutional layers into final predictions such as class scores.

- How are weights and biases initialized in FC layers?

- Weights are usually initialized randomly, often with a scaled scheme such as Xavier (Glorot) or He initialization, while biases commonly start at zero.

- How does ReLU activation improve FC layer performance?

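- ReLU introduces non-linearity by setting negative values to zero. Because its gradient is 1 for positive inputs, it helps mitigate (though does not eliminate) the vanishing-gradient problem seen with saturating activations such as sigmoid, which often lets models converge faster.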

- Can dropout be applied to FC layers?

- Yes. Dropout randomly disables a fraction of the nodes during each training step and is turned off at inference, which improves generalization and reduces overfitting.

- What makes FC layers different from convolutional layers?

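- Convolutional layers slide small filters that share their weights across spatial positions to extract local features, whereas FC layers give every input its own weight for every node and combine these features into predictions for classification.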
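The initialization answer above can be sketched in NumPy. This assumes the uniform variant of Xavier (Glorot) initialization, which draws weights from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)), and sets biases to zero; `xavier_uniform_init` is a hypothetical helper name. PyTorch exposes the same scheme as torch.nn.init.xavier_uniform_, though its layers do not use it by default.

```python
import numpy as np

def xavier_uniform_init(n_in: int, n_out: int, rng: np.random.Generator):
    """Return (weights, biases) for an FC layer with n_in inputs and n_out nodes."""
    limit = np.sqrt(6.0 / (n_in + n_out))
    weights = rng.uniform(-limit, limit, size=(n_out, n_in))  # rows: nodes, columns: inputs
    biases = np.zeros(n_out)                                   # biases start at zero
    return weights, biases

rng = np.random.default_rng(seed=0)
W, b = xavier_uniform_init(18, 4, rng)
print(W.shape, b.shape)  # (4, 18) (4,)
```

Scaling the range by the number of inputs and outputs keeps the weighted sums from growing or shrinking sharply as signals pass through the layer.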

Key Takeaways on Fully Connected Layers

The fully connected layer consolidates learned features into actionable predictions, often serving as the final decision-making stage of a CNN. Understanding how each node is calculated makes it easier to design and optimize CNN architectures for tasks like object detection and classification.

Practical examples, such as image recognition in autonomous vehicles or facial identification, show why FC layers matter. Combined with regularization techniques such as dropout, they help build robust, accurate models that adapt well to unseen data. Mastering this concept is a solid foundation for exploring deeper topics in artificial intelligence. 😊
