Streamlining Your Development Workflow with Docker Profiles

Managing background tasks during development can be tricky, especially when you're juggling multiple services like Celery, CeleryBeat, Flower, and FastAPI. If you're using a devcontainer setup in Visual Studio Code, you might find it overwhelming to start all services at once. This is particularly challenging when you're working with paid APIs that you don’t need active during development.

Imagine a scenario where your Celery workers automatically connect to an expensive API every five minutes, even though you only need them occasionally. This not only wastes resources but also complicates debugging and workflow optimization. The good news is that Docker profiles can simplify this process.

Docker profiles allow you to selectively run specific containers based on your current task. For example, you could start with only essential services like Redis and Postgres, and later spin up Celery and Flower as needed. This approach ensures your development environment is both flexible and cost-effective. 🚀

In this guide, we’ll walk through a practical setup for managing these services in a devcontainer. You'll learn how to avoid common pitfalls and enable smooth task execution without breaking your workflow. By the end, you'll have a tailored setup ready to support your unique development needs. Let's dive in! 🌟

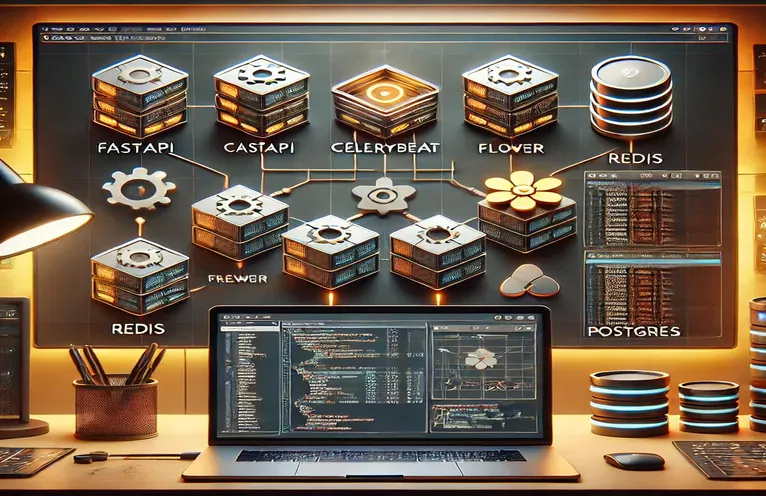

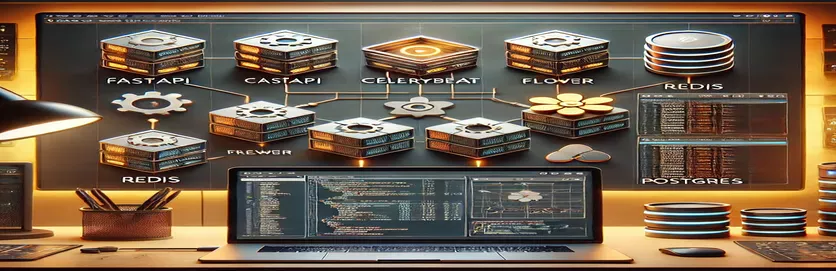

Dynamic Docker Setup for FastAPI, Celery, and Related Services

This Docker Compose file uses profiles to configure selective service startup in a development environment. Services run only when needed, which reduces resource usage.

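```yaml
# Docker Compose file with profiles for selective service activation
version: '3.8'
services:
  trader:
    build:
      context: ..
      dockerfile: .devcontainer/Dockerfile
    volumes:
      - ../:/app:cached
      - ~/.ssh:/home/user/.ssh:ro
      - ~/.gitconfig:/home/user/.gitconfig:cached
    command: sleep infinity
    environment:
      - AGENT_CACHE_REDIS_HOST=redis
      # The postgres service referenced here is not shown in this excerpt.
      - DB_URL=postgresql://myuser:mypassword@postgres:5432/db
    networks:
      - trader-network
    # Compose has no built-in "default" profile: enable it explicitly,
    # e.g. with --profile default or COMPOSE_PROFILES=default.
    profiles:
      - default
  celery:
    build:
      context: ..
      dockerfile: .devcontainer/Dockerfile
    volumes:
      - ../:/app:cached
    command: celery -A src.celery worker --loglevel=debug
    environment:
      - AGENT_CACHE_REDIS_HOST=redis
      - DB_URL=postgresql://myuser:mypassword@postgres:5432/db
    networks:
      - trader-network
    profiles:
      - optional
  redis:
    image: redis:latest
    networks:
      - trader-network
    profiles:
      - default

# The network must be declared, and every service that talks to redis must join it.
networks:
  trader-network:
```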
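To script the selective startup that the Compose file above allows, a small wrapper can assemble the `docker compose` invocation. The sketch below is only an illustration, not part of the original setup: the function names `compose_up_command` and `compose_up` are hypothetical, and it assumes the `docker compose` CLI (Compose v2) is on the PATH and that the script runs from the directory containing the Compose file.

```python
import subprocess


def compose_up_command(profiles=(), services=(), detach=True):
    """Build a `docker compose up` command that enables the given profiles."""
    cmd = ["docker", "compose"]
    for profile in profiles:
        # --profile is a top-level flag, so it goes before the `up` subcommand.
        cmd += ["--profile", profile]
    cmd.append("up")
    if detach:
        cmd.append("-d")
    # Naming services restricts startup to them (plus their dependencies).
    cmd += list(services)
    return cmd


def compose_up(profiles=(), services=()):
    """Run the command; raises CalledProcessError if Compose exits non-zero."""
    subprocess.run(compose_up_command(profiles, services), check=True)


# Essential services first, Celery later when it is actually needed:
#   compose_up(profiles=["default"])
#   compose_up(profiles=["optional"], services=["celery"])
```

Keeping the command construction separate from the call to `subprocess.run` makes the wrapper easy to check without Docker running.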

Optimizing Celery Startup with a Python Script

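This Python script starts the optional Celery services on demand. It uses the Docker SDK for Python to control containers that Compose has already created (for example, with `docker compose --profile optional create`).

```python
import docker


def start_optional_services():
    """Start the optional Compose services whose containers exist but are stopped."""
    client = docker.from_env()
    services = ['celery', 'celerybeat', 'flower']
    for service in services:
        # Compose names containers "<project>-<service>-<n>", so look them up
        # by the service label rather than by container name.
        containers = client.containers.list(
            all=True,
            filters={'label': f'com.docker.compose.service={service}'},
        )
        if not containers:
            print(f"Service {service} not found")
            continue
        for container in containers:
            if container.status != 'running':
                container.start()
                print(f"Started {service}")
            else:
                print(f"{service} is already running")


if __name__ == "__main__":
    start_optional_services()
```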
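Shutting the optional services down again matters as much as starting them, since a forgotten worker keeps running its scheduled jobs. The following is a possible counterpart, written as a sketch: the function name `stop_optional_services` is hypothetical, and it assumes the containers were created by Docker Compose, which labels each container with `com.docker.compose.service`. The client is passed in as an argument, so the function can be tried with a stand-in object as well as with a real `docker.DockerClient`.

```python
OPTIONAL_SERVICES = ("celery", "celerybeat", "flower")


def stop_optional_services(client, services=OPTIONAL_SERVICES):
    """Stop running containers that belong to the given Compose services.

    `client` is expected to behave like docker.DockerClient
    (for example, the object returned by docker.from_env()).
    Returns the names of the services that had containers stopped.
    """
    stopped = []
    for service in services:
        # containers.list() returns only running containers by default.
        running = client.containers.list(
            filters={"label": f"com.docker.compose.service={service}"}
        )
        for container in running:
            container.stop()
        if running:
            stopped.append(service)
    return stopped


# Usage with the real SDK (requires the `docker` package and a Docker daemon):
#   import docker
#   print(stop_optional_services(docker.from_env()))
```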

Unit Testing the Celery Workflow

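This Pytest script checks that a Celery task executes and returns the expected result. The task runs eagerly inside the test process, so the test needs neither a running worker nor a result backend.

```python
import pytest
from celery import Celery


@pytest.fixture
def celery_app():
    app = Celery('test', broker='redis://localhost:6379/0')
    # Execute tasks synchronously in-process instead of sending them to the broker.
    # Without this, result.get() fails because no result backend is configured.
    app.conf.task_always_eager = True
    return app


def test_task_execution(celery_app):
    @celery_app.task
    def add(x, y):
        return x + y

    result = add.delay(2, 3)
    assert result.get(timeout=5) == 5
```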

Optimizing Development with Selective Container Management

When working on a project like a FastAPI application that uses background task managers such as Celery and CeleryBeat, selectively managing container lifecycles becomes crucial. This approach allows you to keep development lightweight while focusing on core features. For instance, during development, you might only need the FastAPI server and database containers active, reserving Celery workers for specific testing scenarios. Leveraging Docker Compose profiles helps achieve this by letting you group containers into categories like "default" and "optional."
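The selection rule behind profiles is simple enough to model in a few lines. The sketch below is an illustration of that rule rather than Compose's actual implementation: the function name `services_to_start` and the service names in the example are hypothetical. It encodes two documented behaviors: a service with no `profiles` key always starts, and a service with profiles starts only when at least one of them is active. It deliberately ignores the case where naming a service on the command line enables its profile implicitly.

```python
def services_to_start(compose_services, active_profiles=()):
    """Return the service names Compose would select for the active profiles."""
    active = set(active_profiles)
    selected = []
    for name, config in compose_services.items():
        profiles = config.get("profiles", [])
        # No profiles: always selected. Otherwise at least one must be active.
        if not profiles or active.intersection(profiles):
            selected.append(name)
    return selected


# Hypothetical service layout, mirroring the "services" section of a Compose file.
services = {
    "api": {},
    "redis": {},
    "celery": {"profiles": ["optional"]},
    "flower": {"profiles": ["optional"]},
}

print(services_to_start(services))
# ['api', 'redis']
print(services_to_start(services, ["optional"]))
# ['api', 'redis', 'celery', 'flower']
```

One consequence of this rule is that essential services are usually simplest to leave without any profile, so they start on a plain `docker compose up`.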

Another critical aspect is ensuring that additional services like Flower (for monitoring Celery) only start when explicitly required. This reduces unnecessary overhead and avoids potentially expensive operations, such as interacting with external APIs during routine development. To implement this, developers can use Docker SDK scripts or pre-configure commands within the container's lifecycle hooks. This technique ensures efficient resource utilization without compromising functionality. For example, imagine debugging a failing task: you can spin up Celery workers and Flower temporarily for just that purpose. 🌟
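The "spin up temporarily" pattern from the debugging example maps naturally onto a context manager that starts the services on entry and stops them on exit, even if the debugging code raises. This is a sketch under assumptions: the name `temporary_services` is hypothetical, the services are assumed to live in a profile called "optional", and the `docker compose` CLI is assumed to be available. The `run` parameter exists only so the command runner can be swapped out.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def temporary_services(services, profile="optional", run=subprocess.run):
    """Start the given Compose services, then stop them when the block exits."""
    base = ["docker", "compose", "--profile", profile]
    run(base + ["up", "-d", *services], check=True)
    try:
        yield
    finally:
        # Runs on normal exit and on exceptions, so workers never linger.
        run(base + ["stop", *services], check=True)


# Usage (requires Docker):
#   with temporary_services(["celery", "flower"]):
#       ...  # reproduce the failing task, inspect it in Flower
```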

Lastly, testing the entire setup with unit tests ensures that these optimizations don't lead to regressions. Writing automated tests to validate Celery tasks, Redis connections, or database integrations saves time and effort. These tests can simulate real-world scenarios, such as queueing tasks and verifying their results. By combining Docker profiles, automated scripting, and robust testing, developers can maintain an agile and effective workflow while scaling efficiently when the need arises. 🚀
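Tests that depend on Redis or Postgres fail noisily when those containers are not running, which is common in an on-demand setup. One option is to probe the port first and skip the integration tests when the service is down. The helper below is a minimal sketch using only the standard library: the name `service_is_reachable` is hypothetical, and the host and port in the usage comment assume Redis on its default port 6379.

```python
import socket


def service_is_reachable(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Usage with Pytest (assumes pytest is installed):
#   requires_redis = pytest.mark.skipif(
#       not service_is_reachable("localhost", 6379),
#       reason="Redis container is not running",
#   )
```

A successful TCP connection shows only that something is listening on the port, not that the service is fully initialized, so treat this as a cheap guard rather than a health check.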

Frequently Asked Questions About Docker and Celery Integration

- What is the purpose of Docker Compose profiles?

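- They group services into logical categories so that containers can be started selectively. For example, a "default" profile can hold essential services like FastAPI, while an "optional" profile holds Celery workers. A service assigned to a profile starts only when that profile is enabled, and Compose does not treat the name "default" specially; services with no profile always start.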

- How do I start a specific service from an optional profile?

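- Run `docker compose --profile optional up celery` to start only the celery service from the "optional" profile. Omit the service name to start every service in that profile, along with the services that have no profile.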

- What is the advantage of using Docker SDK for managing containers?

- Docker SDK enables programmatic control over containers, such as starting or stopping specific services dynamically, through Python scripts.

- How can I monitor Celery tasks in real-time?

- You can use Flower, a web-based monitoring tool. With the `flower` package installed, start it using `celery -A app flower` to view task progress and worker status via a web interface, which listens on port 5555 by default.

- What’s the benefit of running Celery workers only on demand?

- It saves resources and avoids unnecessary API calls. For instance, you can delay starting workers until specific integration tests need background task processing.

Efficient Container Management for Developers

Efficiently managing development resources is crucial for maintaining productivity. By selectively starting services like Celery and Flower, developers can focus on building features without unnecessary distractions. Docker Compose profiles simplify this process, ensuring resources are used wisely.

Scripts and testing frameworks further enhance this setup by providing dynamic service activation and validation. Combined, these tools offer a flexible and robust environment, allowing developers to debug, test, and deploy their FastAPI applications with ease. 🌟

Sources and References for Containerized Application Setup

- Insights on using Docker Compose profiles for selective service activation were referenced from the Docker Documentation.

- Practical guidelines for Celery and FastAPI integration were based on the tutorials available at the Celery Project.

- Steps to optimize development with Flower for task monitoring were inspired by articles in the Flower Documentation.

- Details about the usage of the Python Docker SDK for dynamic container management were obtained from the Docker SDK for Python documentation.

- Testing and debugging methodologies for Celery tasks were reviewed from the Pytest Django Guide.