Challenges of Integrating Spark and Kafka in a Dockerized Environment

Have you ever faced a connectivity issue while integrating a Kafka Broker into a Spark Cluster within a Docker setup? You're not alone! Many developers encounter hurdles when setting up the communication between these two powerful tools. 🛠️

Recently, I embarked on enhancing my Spark Cluster by adding a Kafka broker to streamline real-time data processing. However, I hit a roadblock with persistent connection timeouts and DNS resolution errors, which turned the process into a troubleshooting marathon. 😅

These issues stemmed from misconfigured settings in Docker Compose and Spark's Kafka-related configurations. Despite following several guides and tweaking numerous parameters, the elusive "broker may not be available" message persisted, leaving me puzzled and frustrated.

In this article, I'll share my experience and offer practical steps to resolve connectivity challenges between Spark workers and Kafka brokers in a Docker environment. Along the way, you'll learn tips and tricks to avoid these pitfalls and ensure a seamless integration. Let’s dive in! 🚀

| Command | Example of Use |
|---|---|
| from_json() | This Spark SQL function parses a JSON string and creates a structured data object. In the example, it is used to deserialize Kafka messages into structured data. |
| StructType() | Defines a schema for structured data processing. It is particularly useful for defining the expected format of Kafka messages. |
| .readStream | Initiates a streaming DataFrame in Spark, allowing for continuous data ingestion from Kafka or other streaming sources. |
| writeStream | Defines the output mode and sink for a Spark Structured Streaming query. Here, it specifies writing to the console in append mode. |
| bootstrap_servers | A Kafka configuration parameter that specifies the address of the Kafka broker. Critical for Spark and Kafka communication. |
| auto_offset_reset | A Kafka consumer setting that determines where to start reading messages when no prior offset exists. The "earliest" option starts from the oldest message. |
| KAFKA_ADVERTISED_LISTENERS | A Docker Kafka configuration environment variable. It specifies the advertised addresses for Kafka clients, ensuring proper communication within and outside the Docker network. |
| KAFKA_LISTENERS | Configures the network interfaces on which the Kafka broker listens for incoming connections. Used here for separating internal and external communication. |
| KAFKA_LISTENER_SECURITY_PROTOCOL_MAP | Defines the security protocols for different Kafka listeners. It maps listener names to their respective protocols, such as PLAINTEXT in this case. |
| .awaitTermination() | A Spark Structured Streaming method that blocks the execution of the script until the streaming query is terminated, ensuring that the stream runs continuously. |

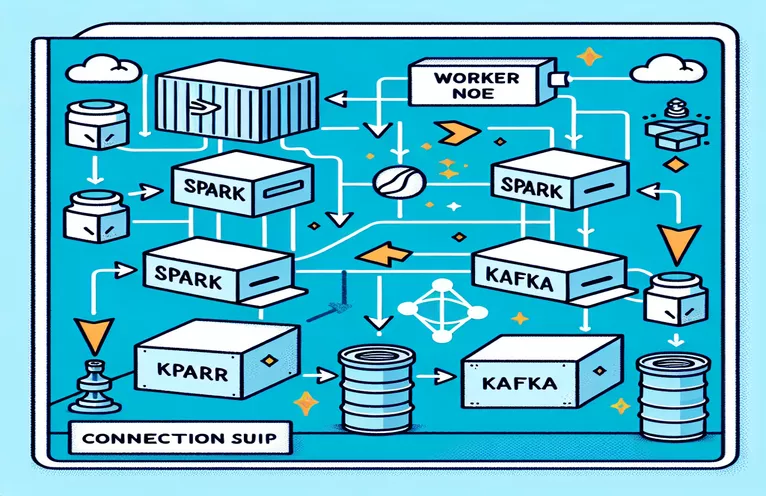

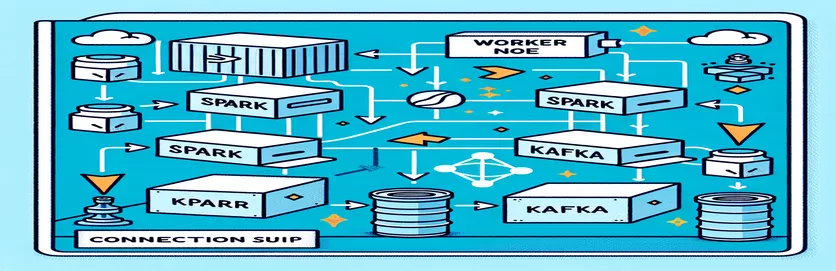

Understanding Spark and Kafka Integration in Docker

The first script focuses on establishing a connection between a Spark Worker and a Kafka Broker. By using Spark's Structured Streaming API, the script reads real-time data from a Kafka topic. It begins by initializing a Spark session and configuring it with the required Kafka package, which provides the connector Spark needs to talk to Kafka. In the script this dependency is `org.apache.spark:spark-sql-kafka-0-10_2.12:3.5.0`; its Scala suffix (`2.12`) and version (`3.5.0`) must match the Spark build running in your cluster.

To handle Kafka messages, the script defines a schema using `StructType`. This schema ensures that the incoming messages are correctly parsed and structured. Real-world scenarios often involve handling JSON data from Kafka. For instance, imagine a cryptocurrency monitoring system where messages containing price updates are sent to Kafka. Parsing these messages into a readable format makes it easier to process and analyze data for trend prediction. 🪙
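As a rough illustration of what the schema step does, the plain-Python sketch below parses one JSON payload and keeps only the expected `message` field, returning `None` for input it cannot parse (similar in spirit to how `from_json` yields null for malformed records). The helper name `parse_message` and the sample payloads are hypothetical, and the check is stricter than Spark's own parsing rules, so treat it as a way to sanity-check sample messages rather than a replacement for the schema.

```python
import json
from typing import Optional

# Field declared in the article's schema: StructType().add("message", StringType())
EXPECTED_FIELD = "message"


def parse_message(raw: bytes) -> Optional[str]:
    """Return the 'message' field of a JSON payload, or None if it cannot be parsed."""
    try:
        payload = json.loads(raw.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return None
    if not isinstance(payload, dict):
        return None
    value = payload.get(EXPECTED_FIELD)
    # This sketch accepts only string values; Spark may be more lenient.
    return value if isinstance(value, str) else None
```

Feeding it a few payloads copied from your topic is a quick way to confirm the producer really emits the shape the schema expects.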

The Docker Compose configuration plays a pivotal role in resolving connectivity issues. The `KAFKA_LISTENERS` and `KAFKA_ADVERTISED_LISTENERS` settings separate internal from external traffic: the first controls where the broker binds, and the second controls the address it hands back to clients after the initial bootstrap connection. Each listener needs its own port. For example, advertising `INSIDE://kafka:9093` lets containers on the same Docker network, such as Spark workers, reach the broker by service name, while `OUTSIDE://localhost:9094` lets applications on the host, like monitoring tools, connect through a published port. A client that bootstraps against the wrong listener is handed an address it cannot reach, which is a common cause of the "broker may not be available" warning.
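One way to catch listener mistakes before starting the containers is to parse the listener string and look for ports claimed by more than one listener name. The sketch below is a minimal stdlib-only check; the function names `parse_listeners` and `find_port_conflicts` are hypothetical helpers, not part of Kafka or Docker, and the check is meant for the value of `KAFKA_LISTENERS` (the bind addresses).

```python
from typing import Dict, List, Tuple


def parse_listeners(value: str) -> Dict[str, Tuple[str, int]]:
    """Parse 'NAME://host:port,NAME2://host:port' into {name: (host, port)}."""
    listeners: Dict[str, Tuple[str, int]] = {}
    for entry in value.split(","):
        name, _, address = entry.strip().partition("://")
        # The host part may be empty, as in 'INSIDE://:9093'.
        host, _, port = address.rpartition(":")
        listeners[name] = (host, int(port))
    return listeners


def find_port_conflicts(value: str) -> Dict[int, List[str]]:
    """Return every port that is claimed by more than one listener name."""
    by_port: Dict[int, List[str]] = {}
    for name, (_host, port) in parse_listeners(value).items():
        by_port.setdefault(port, []).append(name)
    return {port: names for port, names in by_port.items() if len(names) > 1}
```

For instance, `find_port_conflicts("INSIDE://:9093,OUTSIDE://0.0.0.0:9093")` reports that both listeners claim port 9093, whereas a value with distinct ports yields an empty dictionary.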

The second script demonstrates how to use a Python `KafkaConsumer` for testing the Kafka connection. This is a simple yet effective way to confirm that the broker is reachable and serving data independently of Spark. If the consumer can read messages from the specified topic, the listener and network settings are working, and any remaining problem lies on the Spark side. Consider an application that tracks stock market data: running this consumer first surfaces configuration errors before they cause missed updates in the streaming job. 🚀

Handling Connectivity Issues Between Spark Worker and Kafka Broker

Solution 1: Using Python for debugging and resolving connection issues in Spark and Kafka with Docker

```python
# Import necessary modules
from pyspark.sql import SparkSession
from pyspark.sql.types import StructType, StringType
from pyspark.sql.functions import from_json, col

# Initialize Spark session with Kafka dependency
spark = SparkSession.builder \
    .appName("KafkaDebugReader") \
    .config("spark.jars.packages", "org.apache.spark:spark-sql-kafka-0-10_2.12:3.5.0") \
    .getOrCreate()

# Define schema for Kafka message
schema = StructType().add("message", StringType())

# Set up Kafka source for streaming data.
# Spark workers running in Docker must use the broker's internal listener
# (service name "kafka"); "localhost" would point at the worker container itself.
df = spark.readStream \
    .format("kafka") \
    .option("kafka.bootstrap.servers", "kafka:9093") \
    .option("subscribe", "crypto_topic") \
    .option("startingOffsets", "earliest") \
    .load()

# Parse Kafka message
messages = df.select(from_json(col("value").cast("string"), schema).alias("data")) \
    .select("data.message")

# Output data to console
query = messages.writeStream \
    .outputMode("append") \
    .format("console") \
    .start()

# Block until the streaming query is stopped
query.awaitTermination()
```

Debugging DNS Resolution Issues in Dockerized Kafka

Solution 2: Modifying Docker Compose configuration for proper DNS resolution

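```yaml
version: '3.8'
services:
  kafka:
    image: wurstmeister/kafka
    container_name: kafka
    ports:
      - "9094:9094"  # publish only the external listener to the host
    environment:
      # Addresses handed back to clients: service name inside, localhost outside
      KAFKA_ADVERTISED_LISTENERS: INSIDE://kafka:9093,OUTSIDE://localhost:9094
      # Each listener must bind a different port
      KAFKA_LISTENERS: INSIDE://0.0.0.0:9093,OUTSIDE://0.0.0.0:9094
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
      # Listener used for broker-to-broker traffic; needed with custom listener names
      KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
    depends_on:
      - zookeeper
    networks:
      - my_network
  zookeeper:
    image: zookeeper
    container_name: zookeeper
    ports:
      - "2181:2181"
    networks:
      - my_network
networks:
  my_network:
    driver: bridge
```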

Testing Kafka Consumer Connection

Solution 3: Python Kafka Consumer for testing the connection

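```python
# Import KafkaConsumer from the kafka-python library
from kafka import KafkaConsumer

# Create a Kafka consumer instance.
# The address "kafka:9093" resolves only from a container on the same Docker network.
consumer = KafkaConsumer(
    'crypto_topic',
    bootstrap_servers='kafka:9093',
    auto_offset_reset='earliest',
    enable_auto_commit=False,
    group_id='api_data'
)

try:
    # Iterate over messages from the Kafka topic; this blocks until interrupted
    for message in consumer:
        print(f"Received message: {message.value.decode('utf-8')}")
except KeyboardInterrupt:
    pass
finally:
    # Always close the consumer, even when the loop is interrupted
    consumer.close()
```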

Optimizing Kafka and Spark in a Dockerized Environment

A critical aspect of ensuring smooth communication between Kafka Brokers and Spark Workers in Docker is configuring network settings effectively. Docker's embedded DNS resolves container and service names only between containers attached to the same user-defined network, so a service left off that network shows up as a DNS resolution failure rather than an obvious configuration error. To address this, you can use Docker Compose's network configuration options. For instance, defining a custom network like `my_network` and attaching both services to it lets containers reach each other by name rather than IP, which simplifies setup and avoids common pitfalls.
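When a connection fails, it helps to know whether the name failed to resolve or the port simply was not reachable. The following stdlib-only sketch separates the two cases; `check_broker` is a hypothetical helper name, and the host and port you pass in (for example `kafka` and `9093`) are assumptions that depend on your own Compose file.

```python
import socket


def check_broker(host: str, port: int, timeout: float = 3.0) -> str:
    """Classify reachability of host:port as 'ok', 'dns_failure' or 'connect_failure'."""
    try:
        # Step 1: name resolution. Fails when the name is unknown, for example
        # because the calling container is not on the broker's Docker network.
        socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        return "dns_failure"
    try:
        # Step 2: TCP connect. Fails when nothing listens on the port,
        # the port is blocked, or the attempt times out.
        with socket.create_connection((host, port), timeout=timeout):
            return "ok"
    except OSError:
        return "connect_failure"
```

Running this from inside the Spark worker container (rather than from the host) tests the same network path the streaming job will use. A successful TCP connect only shows the port is open; it does not prove the advertised listener address is correct.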

Another essential consideration is optimizing Kafka's listener configurations. By specifying `KAFKA_ADVERTISED_LISTENERS` and `KAFKA_LISTENERS` in your Docker Compose file, you allow Kafka to advertise appropriate addresses to its clients. This differentiation between internal and external listeners resolves conflicts, particularly when Spark Workers attempt to connect from outside the Docker network. A real-life example of this is a monitoring dashboard querying Kafka data from a host machine, requiring a distinct external listener for access. 🔧
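Because the right bootstrap address depends on where the client runs, one option is to pick it from the environment instead of hard-coding it. The sketch below is only an illustration: the variable names `KAFKA_BOOTSTRAP_SERVERS` and `RUNNING_IN_DOCKER` and both default addresses are assumptions, so substitute whatever your advertised listeners actually declare.

```python
import os
from typing import Mapping

# Placeholder addresses (assumptions): internal listener for containers,
# external listener for clients on the host machine.
DEFAULT_INTERNAL = "kafka:9093"
DEFAULT_EXTERNAL = "localhost:9094"


def bootstrap_servers(env: Mapping[str, str] = os.environ) -> str:
    """Choose a Kafka bootstrap address based on environment variables."""
    explicit = env.get("KAFKA_BOOTSTRAP_SERVERS")
    if explicit:
        # An explicit setting always wins.
        return explicit
    in_docker = env.get("RUNNING_IN_DOCKER", "").lower() in {"1", "true", "yes"}
    return DEFAULT_INTERNAL if in_docker else DEFAULT_EXTERNAL
```

The returned string can then be passed to `kafka.bootstrap.servers` in Spark or to `bootstrap_servers` in the Python consumer, so the same script works in both locations.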

Finally, implementing robust error handling in your Spark applications is crucial. For example, retries and fallbacks around the Kafka connection can absorb temporary connectivity issues gracefully. Throughput tuning is a separate concern: Spark passes options prefixed with `kafka.` to the underlying consumer, so `.option("kafka.max.poll.records", "500")` caps the number of records returned per poll, but it does not add any retry behavior. Imagine a production-grade application tracking stock prices in real time; having fail-safes in place keeps data flowing through brief network hiccups. These techniques together form the backbone of a reliable data processing pipeline. 🚀
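The retry idea can be sketched as a small helper that re-runs a connection attempt with exponential backoff. This is a generic pattern rather than a Spark or Kafka API; the name `retry_with_backoff` and its parameters are hypothetical, and the `sleep` argument exists only so the delay can be replaced in tests.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.5,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(), retrying on the given exceptions with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == attempts:
                raise  # Out of attempts: surface the last error to the caller.
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("attempts must be at least 1")
```

A typical use would be wrapping the creation of the test consumer, passing the client library's "no broker" exception in `retry_on` (with kafka-python that is likely `kafka.errors.NoBrokersAvailable`), so a broker that is still starting does not fail the script immediately.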

Common Questions About Spark and Kafka in Docker

- What is the purpose of KAFKA_ADVERTISED_LISTENERS?

- It specifies the advertised addresses for Kafka clients to connect, ensuring proper communication in and outside the Docker network.

- How do you define a custom network in Docker Compose?

- Declare the network under the top-level `networks` key, then attach each service to it by listing `- my_network` under that service's own `networks` key.

- Why does DNS resolution fail in Docker containers?

- Containers can resolve each other by name only when they are attached to the same user-defined Docker network, because Docker's embedded DNS is scoped to that network.

- What is the role of .option("subscribe", "topic") in Spark Streaming?

- It subscribes the Spark Structured Streaming DataFrame to the specified Kafka topic for real-time data ingestion.

- How can retries improve Kafka-Spark integration?

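- Retrying failed connection attempts, ideally with a backoff between tries, rides out transient errors such as a broker that is still starting. Note that `max.poll.records` is a batch-size setting that limits records per poll, not a retry setting.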

Simplifying Spark and Kafka Integration

Setting up Spark and Kafka in Docker can be complex, but with the right configurations, it becomes manageable. Focus on listener settings and network configurations to avoid connectivity issues, and make sure Zookeeper is up before the Kafka broker starts, since the broker registers with it on startup.

Real-world use cases, such as monitoring financial data or IoT streams, highlight the importance of robust configurations. The tools and scripts shared here equip you with the knowledge to overcome common hurdles and build efficient, real-time data pipelines. 🛠️

Sources and References

- This article was informed by the official Apache Spark Kafka Integration Documentation, which provides detailed insights into configuration and usage.

- Docker networking best practices were referenced from the Docker Networking Documentation to ensure accurate and reliable container communication setups.

- Practical examples and additional Kafka settings were adapted from the Wurstmeister Kafka Docker GitHub Repository.